Raspberry Pi Cluster

December 23, 2014

After working on it for a little while, I finally got my 32-node Raspberry Pi cluster working. We had a bunch of them sitting around, and I thought the best use for them would be to cluster them together. Others have done this before, and the instructions from Southampton were really useful.

Components I used:

- 32 Raspberry Pi B models.

- 31 SD cards, 8 GB each.

- 1 SD card with 128 GB. This is for the "head node", which stores all user files. The other nodes access those files over the Network File System (NFS). Because all files are stored on one node, I splurged on a bigger card for that node.

- 32 USB power cords.

- 5 USB power hubs with 7 ports each. That is 35 ports in total, enough to power all 32 Pis.

- A 48-port Ethernet switch so the Pis can talk to each other.

- A home Linksys wireless router. Because the switch is a "dumb" one, it does not actually do routing (switches that do are much more expensive). In order to allow the Pis to communicate over IP, we need a router. So the switch plugs into the router, and each Pi plugs into the switch. Not the fanciest way to do things, but it works!

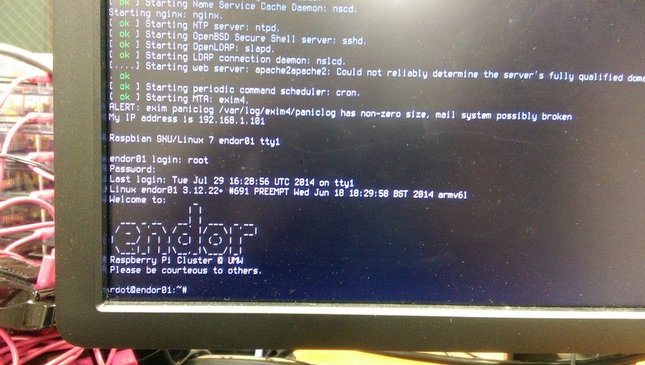

Setting up the SD cards properly was the tricky part. I used the standard Raspbian OS as a base. The head node is configured specially, as it serves as both the NFS server and the LDAP server. An LDAP server allows the cluster to keep user name and password configuration in one place. So you can log into any of the 32 nodes with the same credentials, and we don't have to add users on all 32 nodes (which would be awful). NFS likewise lets users access their files no matter which node they are on.

The other 31 nodes are almost identical. They are NFS and LDAP clients, and have all of the programs and packages I thought would be useful. After creating one image, I cloned it onto the other 30 nodes. The tricky part was setting their host names and IP addresses in /etc/hostname, /etc/hosts and /etc/network/interfaces. I could not think of a reasonable way to automate this, so I edited each of these files by hand. The host name of the cluster is "endor", with nodes being named "endor01", "endor02", etc.
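In hindsight, at least the list of host names and addresses could probably be generated instead of typed. Here is a rough Python sketch of that idea, not what I actually ran. The function names, the 192.168.1.101 starting address, and the assumption that the numbering runs from 01 to 32 across all nodes are made up for illustration, so adjust them to whatever your router hands out.

```python
import ipaddress


def node_names(prefix="endor", count=32):
    """Return zero-padded node names: endor01, endor02, ..., endor32."""
    return [f"{prefix}{i:02d}" for i in range(1, count + 1)]


def hosts_entries(names, first_ip="192.168.1.101"):
    """Pair each name with a consecutive IP, formatted as /etc/hosts lines.

    first_ip is a hypothetical starting address, not the one my cluster uses.
    """
    base = ipaddress.IPv4Address(first_ip)
    return [f"{base + i}\t{name}" for i, name in enumerate(names)]


if __name__ == "__main__":
    # Print lines that could be pasted into /etc/hosts on every node.
    for line in hosts_entries(node_names()):
        print(line)
```

The same list of names could then drive a loop that writes /etc/hostname onto each card after cloning, though that part still depends on how you mount the cards.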

Here is the front of the cluster. It is not fancy: the Pis are just rubber-banded together and sitting on top of the switch.

The back is even uglier :/

But it works!

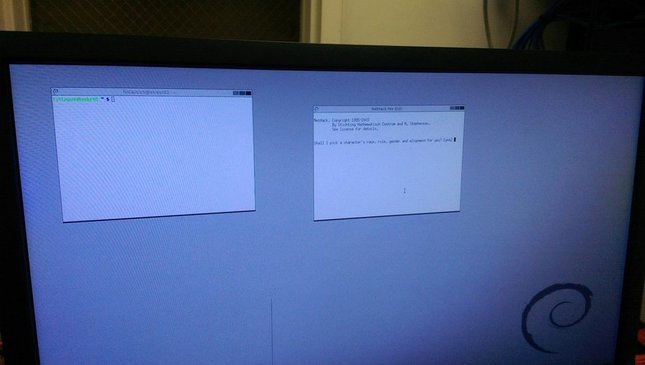

GUI as well :)