Reductions & Parallel For

Reductions

An OpenMP sum program with a critical section is as follows:

#include <stdlib.h>

#include <omp.h>

#include <stdio.h>

#define THREADS 32

#define START 0

#define END 100000

/* a global variable */

unsigned long sum = 0;

/* the function called for each thread */

void sum_part() {

/* get our thread id */

int id = omp_get_thread_num();

/* calculate the start and end points by evenly dividing the range */

unsigned long start = ((END - START) / THREADS) * id;

unsigned long end = start + ((END - START) / THREADS) - 1;

/* the last thread needs to do all remaining ones */

if (id == (THREADS - 1)) {

end = END;

}

/* do the calculation */

unsigned long i;

for (i = start; i <= end; i++) {

#pragma omp critical

sum += i;

}

}

int main() {

#pragma omp parallel num_threads(THREADS)

sum_part();

/* now all results are in */

printf("Final answer = %lu.\n", sum);

return 0;

}

This works, but the fact that multiple threads cannot add at the same time defeats any performance gain for this program.

There are a few alternatives:

- Have each thread use its own local sum, then add them in main.

- Have each thread use its own local sum, then have each thread add it into a global sum in a critical section.

- Use a reduction!

A reduction is an operator and a variable. It tells OpenMP that all of the results should be combined according to the operator.

Below is a version of the sum program that uses a reduction:

#include <stdlib.h>

#include <omp.h>

#include <stdio.h>

#define THREADS 32

#define START 0

#define END 100000

/* the function called for each thread */

unsigned long sum_part() {

/* get our thread id */

int id = omp_get_thread_num();

/* calculate the start and end points by evenly dividing the range */

unsigned long start = ((END - START) / THREADS) * id;

unsigned long end = start + ((END - START) / THREADS) - 1;

/* the last thread needs to do all remaining ones */

if (id == (THREADS - 1)) {

end = END;

}

/* do the calculation */

unsigned long i, sum = 0;

for (i = start; i <= end; i++) {

sum += i;

}

return sum;

}

int main() {

unsigned long global_sum = 0;

#pragma omp parallel num_threads(THREADS) reduction(+:global_sum)

global_sum += sum_part();

/* now all results are in */

printf("Final answer = %lu.\n", global_sum);

return 0;

}

The reduction is specified on the "#pragma omp parallel" line. It consists of some operator from (+ * - & | ^ && ||) and some variable, separated by a colon.

The goal of a reduction is to tell OpenMP that the operator needs to be applied for each thread individually, but can be done in any order.

Associativity of Operators

Integer addition is associative which means we can add the numbers in any order and get the same results. No matter which order OpenMP performs the additions, we will get the same result.

Subtraction is not associative which makes its use as a reduction operator limited.

Floating point operations are actually never associative. Different orders can introduce different rounding errors.

The following program is likely to produce different results in multiple runs:

#include <stdlib.h>

#include <omp.h>

#include <stdio.h>

#define THREADS 32

#define START 0

#define END 100000

/* the function called for each thread */

float sum_part() {

/* get our thread id */

int id = omp_get_thread_num();

/* calculate the start and end points by evenly dividing the range */

unsigned long start = ((END - START) / THREADS) * id;

unsigned long end = start + ((END - START) / THREADS) - 1;

/* the last thread needs to do all remaining ones */

if (id == (THREADS - 1)) {

end = END;

}

/* do the calculation */

unsigned long i;

float sum = 0;

for (i = start; i <= end; i++) {

sum += i;

}

return sum;

}

int main() {

float global_sum = 0;

#pragma omp parallel num_threads(THREADS) reduction(+:global_sum)

global_sum += sum_part();

/* now all results are in */

printf("Final answer = %f.\n", global_sum);

return 0;

}

This is usually not a problem as floating point values are approximations anyway.

Parallel For

Because for loops are a common structure in programs, and are easily parallelized, OpenMP provides explicit support for them.

This is done with the parallel for directive:

#pragma omp parallel for num_threads(N)

for (...) {

/* body */

}

OpenMP will automatically split the iterations of the loop amongst the N threads as evenly as possible. The simplest way to write our sum program in OpenMP is as follows:

#include <stdlib.h>

#include <omp.h>

#include <stdio.h>

#define THREADS 32

#define START 0

#define END 100000

int main() {

unsigned long sum = 0, i;

#pragma omp parallel for num_threads(THREADS) reduction(+:sum)

for (i = START; i <= END; i++) {

sum += i;

}

printf("Final answer = %lu.\n", sum);

return 0;

}

OpenMP parallel for directives make parallelizing existing programs very easy! We do not even have to divide the range ourselves, OpenMP will handle it automatically.

Parallel For Code Breaking Example

The code breaking example is also better accomplished with a parallel for loop:

/* try each possible shift amount */

int found = 0, i;

#pragma omp parallel for num_threads(26) reduction(||:found)

for (i = 0; i < 26; i++) {

found = found || break_code(encrypted, length, i);

}

if (!found) {

printf("Text could not be cracked with this method!\n");

}

This is a better method since we can now adjust the number of threads and have the work automatically re-distributed amongst the threads.

Note we also use a reduction with the || operator to see if any of the threads are able to decrypt the text.

Caveats

There are some limitations of the parallel for directive, however:

- Only for loops are supported. While loops and do/while loops can not be parallelized automatically, they would need to be re-written as for loops.

- The number of iterations must be known exactly when we enter the loop.

- The types of the loop control variables must be integers, and they cannot be modified inside the loop.

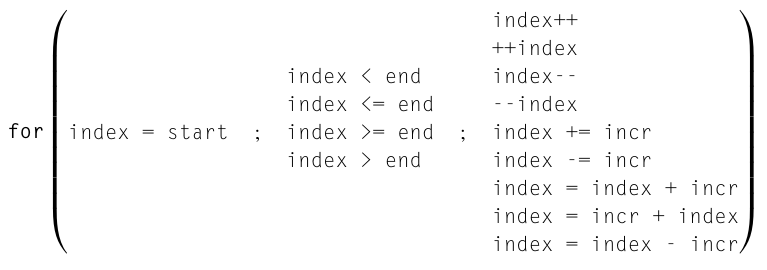

The for loop must be in "canonical form":

These restrictions are to allow OpenMP to be able to automatically calculate the number of iterations per thread, and the start and end points for each thread.

For example, the following for loops can not be parallelized with OpenMP parallel for directives:

for (i = 0; i < SIZE; i++) {

i = function();

}

for (i = 0; i < SIZE; i++) {

if (array[i] == item) {

break;

}

}

for (curr = head; curr != NULL; curr = curr->next;) {

/* do something */

}

for (i = 0.0; i < 100.0; i += 3.5) {

/* do something */

}

for (i = 1; i < 1000; i *= 2) {

/* do something */

}

We are allowed to call exit() in a loop, however.

Loop-Carried Dependence

The following program uses an OpenMP parallel for loop to compute the first 100 Fibonacci numbers:

#include <stdlib.h>

#include <omp.h>

#include <stdio.h>

#define THREADS 20

#define N 100

int main() {

/* an array of fibonacci numbers */

unsigned long long fibs[N];

/* base cases */

fibs[0] = fibs[1] = 1;

/* compute the rest */

int i;

#pragma omp parallel for num_threads(THREADS)

for (i = 2; i < N; i++) {

fibs[i] = fibs[i - 1] + fibs[i - 2];

}

/* print them */

for (i = 0; i < N; i++) {

printf("%llu ", fibs[i]);

}

return 0;

}

The for loop abides by the form outlined above, but this program is non-deterministic. Why?

The reason is that there is a loop-carried dependence from one iteration of this loop to the next. The first iteration has to be completed before the second, the second before the third, and so on.

OpenMP will not catch this kind of issue, and a loop with such a dependence cannot be easily parallelized.