Algorithm Analysis Part 3

Finding the Order of an Algorithm

There are a couple of rules for finding the order of an algorithm:

- If you do two parts in sequence (one after the other), then you add them together.

- If you do something inside of a loop, then you multiply it by how many times the loop runs.

For example, the following method finds the biggest item in an array:

int max(int[] array) {

int max_so_far = array[0];

for(int i = 1; i < array.length; i++) {

if(max_so_far < array[i]) {

max_so_far = array[i];

}

}

return max_so_far;

}

So for this one, we have three parts in sequence, so we will add them together. Then we need to multiply the steps done in the loop by how many times the loop will run (which is the length of the array - 1).

If we count the first and last instructions as 1 step, and the body of the loop as 3 (the if statement, the incrementing of i, and the check if we continue), then we have:

$f(n) = 3 \cdot n + 2$

Which is simplified to:

$O(n)$

Most times we won't bother to count the steps at all when doing this. We just find the loops and use them to determine the order. If there are no loops, then it must be $O(1)$.

More Examples

The following algorithm prints a multiplication table of a given size:

void printMultTable(int size) {

for(int i = 0; i <= size; i++) {

for(int j = 0; j <= size; j++) {

System.out.print(i * j + " ");

}

System.out.print("\n");

}

}

This has nested loops. Following the rule that we have to multiply what's done in the loop by how many times it executes, this algorithm is $O(n^2)$. This is because the print statement takes a constant amount of time, and we multiply this by $n$ for one loop, and then another $n$ for the other loop. In total that's some constant multiplied by $n^2$ which simplifies to just $O(n^2)$.

How about this algorithm to resize an array?

- Make a new, resized array.

- Copy the elements of the old array into the new one.

- Reset the old array to point at the new one.

This is trickier since this is written as pseudo-code instead of Java. However, thinking about it, you should agree that the only loop required is in step 2, which needs to go through the whole array once, making this $O(n)$.

Lastly, how about this algorithm to push onto an array stack?

// push on a new value

public void push(Type item) {

top++;

array[top] = item;

}

Because there are no loops, this must be $O(1)$.

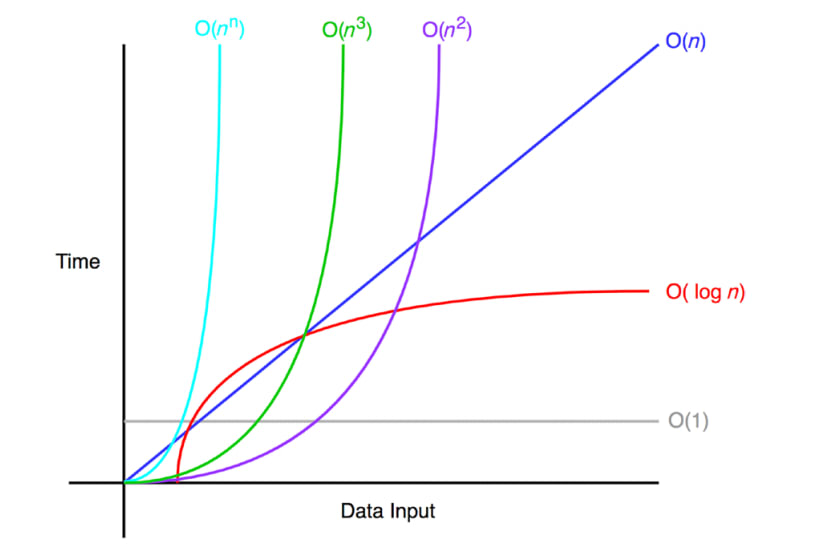

Comparison of Complexities

So far we have seen algorithms with $O(1)$, $O(n)$, and $O(n^2)$ complexity. By the way, "complexity" refers to how the steps taken scale with the input. Larger Big-O values mean greater complexity.

There are other possible Big-O complexities, some of which we will see over the course of the semester. Of course the lower complexity, the less time our algorithms will need for large inputs.

The following figure shows a few common complexities compared:

We will see many more examples of algorithm analysis through the semester!